For the past 5.5 years I’ve been head-down in the exciting area of stream processing and events. I realised recently that the world of data and analytics I worked in up to 2017, which was changing significantly back then (Big Data, y’all!), has evolved and, dare I say it, matured somewhat - and I’ve not necessarily kept up with it. In this series of posts you can follow along as I start to reacquaint myself with where it’s got to these days.

Background

Twenty years ago (😱TWENTY😱) I took my first job from university using my 🎼 Music degree to…build data warehouses. I was lucky to get a place on a graduate scheme at a well-established retailer with an excellent training programme. I was taught COBOL, DB2, TSO, and all that fun stuff. I even remember my TSO handle - TSO954. Weird, huh? From there I continued in the data space, via a stint as a DBA on production OLTP systems, and then into more data warehousing and analytics with Oracle in 2010.

From the mid-2010s I became aware of the Big Data ecosystem emerging, primarily around Apache Hadoop and Cloudera (anyone remember Data Is The New Bacon?). All the things I’d been used to doing with analytical data suddenly became really difficult. Query some data? Write Java code. Update some data? Haha nope. Use SQL? Hah, welcome to this alpha release that probably doesn’t work. And BTW, if you didn’t know it before, now you truly know the meaning of JAR hell.

Snark aside, I spent some time looking at some of the tools and building out examples of their use in 2016, before moving jobs into my current role at Confluent.

At Confluent I’ve been working with Apache Kafka and diving deep into the world of stream processing, including building streaming data pipelines. When I took on the role of chair of the program committee for Current 22, part of the remit was to help source and build a program that covered the broader data landscape beyond Kafka alone. In doing this I realised quite how much had changed in recent years, which gave me an itch to try to make some sense of it.

Herewith that itch being scratched…

How It Started

It’s hard to write a piece like this without invoking The Four Yorkshiremen at some point, so I’ll get it out of the way now. Back in my day, my starting point for what is nowadays called <Data|Analytics> Engineering was what pretty much everyone, bar the real cool kids in Silicon Valley, was doing in the early 2010s (and for the decades before that):

- Find a source system - probably just one, or maybe two. Almost certainly a mainframe, Oracle, or flat files.
- ETL/ELT the data into a target Data Warehouse or Data Mart, probably with a star or snowflake schema.
- Build dashboards on the data.

It was maybe not as neat as this, with various elements of "shadow IT" springing up to circumvent real (or perceived) limitations - but at least you knew what you should be doing and aspiring to.

Extracts were usually run once a day. Operational Data Stores were becoming a bit trendy then, along with 'micro-batch' extracts, which meant data arriving more frequently, e.g. every 5-10 minutes.

Tools were the traditional ETL lot (Informatica, DataStage, etc.), with Oracle Data Integrator (née Sunopsis) bringing the concept of ELT. Instead of buying servers (e.g. for Informatica) to run the Transform, you took advantage of the target database’s ability to crunch data: load the data into one set of tables in the database, transform it there, and load the result into another set of tables. Nowadays ELT is not such a clear-cut concept (for non-obvious reasons).
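To make the ELT pattern above a bit more concrete, here is a minimal sketch. It uses Python's built-in `sqlite3` module purely as a stand-in for the target database, and the table names (`stg_orders`, `fct_daily_sales`) and sample rows are hypothetical. The point is only that the Transform runs as SQL inside the target database, going from one set of tables to another, rather than on a separate ETL server.

```python
import sqlite3


def run_elt(rows):
    """Load raw rows into a staging table, then transform them inside the database.

    sqlite3 is an illustrative stand-in for the target database; table names are hypothetical.
    """
    conn = sqlite3.connect(":memory:")
    cur = conn.cursor()

    # Extract + Load: land the source data as-is in a staging table
    cur.execute("CREATE TABLE stg_orders (order_id INTEGER, order_date TEXT, amount REAL)")
    cur.executemany("INSERT INTO stg_orders VALUES (?, ?, ?)", rows)

    # Transform: the database itself does the crunching, writing to another table
    cur.execute(
        """
        CREATE TABLE fct_daily_sales AS
        SELECT order_date, COUNT(*) AS order_count, SUM(amount) AS total_amount
        FROM stg_orders
        GROUP BY order_date
        """
    )
    conn.commit()

    result = cur.execute(
        "SELECT order_date, order_count, total_amount FROM fct_daily_sales ORDER BY order_date"
    ).fetchall()
    conn.close()
    return result


if __name__ == "__main__":
    source_rows = [
        (1, "2022-10-01", 10.0),
        (2, "2022-10-01", 5.5),
        (3, "2022-10-02", 20.0),
    ]
    for row in run_elt(source_rows):
        print(row)
```

In the ETL tools of the time, the equivalent aggregation would have run on the tool's own servers before the data ever reached the warehouse.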

Data modelling was an important part of the work, as was the separation of logical and physical at both the ELT stage (ODI) and reporting (e.g. OBIEE’s semantic layer).

Source control in analytics was something that the hipsters did. Emailing files around and using CODE_FILE_V01, CODE_FILE_V02, CODE_FILE_PROD_RELESE!_V3 was seen as perfectly acceptable and sufficient. Even if you wanted to use source control, it was often difficult.

Data Warehouses tended to live on dedicated platforms such as Teradata and Netezza, as well as on the big RDBMSs such as Oracle, DB2, and SQL Server.

Scaling was mostly vertical (buy a bigger box, put in more CPUs). Oracle’s Exadata, an engineered system, launched with the promise of magical performance improvements from a combination of hardware and software fairy dust to make everything go quick.

Building around open source software was not commonplace for most companies of any size, and Cloud was in the early phases of the hype cycle.

Analytics work was centralised around the BI (Business Intelligence) or MIS (Management Information Systems) teams, or, going back a bit further, the DSS (Decision Support System) team. Source system owners would grudgingly allow an extract from their systems, and figuring out the specifics of the domain model was often left to the analytics team.

How It’s Going

First, a HUGE caveat: whereas the above is written based on my experience as a "practitioner" (I was actually doing and building this stuff), what comes next is my perception from conversations, my Twitter bubble, etc. I would be deeply pleased to be corrected on any skew in what I write.

Where to start?

The use of data has changed. The technology to do so has changed. The general technical competence and willingness to interact with IT systems has changed.

Data is no longer used just for a report printed out on a Monday morning. Data is used throughout companies to inform and automate processes, as described by Jay Kreps in his article Every Company is Becoming a Software Company.

Handling of data is done by many teams, not just one. In the new world of the Data Mesh, even source system owners are supposed to get in on the game and publish their data as a data product. Outside of IT, other departments in a company are staffed by a new generation of "technology native" employees - those who grew up with the internet and computers being just as much a part of their worlds as television and the telephone. Rather than commissioning a long project from the IT department, they’re as likely to self-serve their requirements, either building something themselves or using a credit card to sign up for one of the thousands of SaaS platforms.

The data engineering world has realised, if not fully accepted, that software engineering practices including testing, CI/CD, etc. are important - see this interesting discussion on the "shift left" of data engineering.

Hardware is no longer limited to what you can procure and provision in your data center through a regimented and standardised central process. It is whatever you can imagine (and afford) in the Cloud. Laptops themselves are so powerful that much processing can just be done locally. And the fact that it’s laptops is notable - now anyone can work anywhere. The desktop machine in the office is becoming an anachronism except for specialised roles such as video processing. That mobility in itself adds considerations to how technology serves us too.

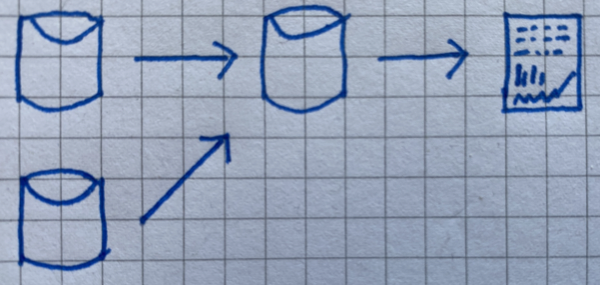

Both SeattleDataGuy in his article The Baseline Data Stack and James Densmore in his book Data Pipelines Pocket Reference describe a basic approach to moving data into a place from which it can be analysed. Build a pipeline to do a batch extract of data from the source system into a target store from which it can be worked on. No streaming, no fancy tooling - just good ole' ETL. Same as we saw above, right? RIGHT?

Well, not really. Instead of one or two sources, there are probably dozens if not hundreds. Instead of one central data warehouse, there will be numerous data stores. And rather than just a set of static reports to print out for a Monday morning, the data is being used in every corner of the business on every device and platform you can imagine.

All Change, Please

The world of software has been blown apart, driven in my opinion by the internet, Cloud, and open source.

Never mind placing an order for software and waiting for the installation media to arrive. The world of software is at our disposal and just a download link away. With Docker you can spin up and try a dozen data stores in a day and pick the one that suits you best. Cloud gives you the ability to provision capacity on which to run whatever you’d like (IaaS), or, as is widely the case, to provision the software itself (SaaS). They host it, they support it, they tune it - all you do is use it. Companies no longer have to choose simply between paying IBM for a mainframe licence or Microsoft for a Windows licence, but can choose whether to pay at all. Linux went from being a niche geek interest to the foundation on which a huge number of critical systems run. Oracle is still a dominant player in the RDBMS world, but you’re no longer an oddity if you propose to use Postgres instead.

And speaking of a dozen data stores, nowadays there are stores specialised for every purpose. NoSQL, oldSQL, someSQL, NewSQL and everywhere in between. Graph, relational, and document. AWS in particular has leant into this, mirroring what’s available to run yourself in their plethora of SaaS offerings in the data space.

Job Titles

In terms of job titles, back in the day you were often a programmer, a data warehouse specialist, a BI analyst, or any of the many titles in between. Nowadays there are people who get the actual value out of the data that pays for all of this to happen. They might still be called Analysts of one flavour or another, but more often they’re Data Scientists. This overlaps and bleeds into the ML world too. For a few years the people who got the data for the analysts to work with were Data Engineers (modelled on the Software Engineers that the "programmers" of old had become). It seems to me that this label has since split further, with Data Engineering being the discipline of getting the data out of the source and building the pipelines to get it into some kind of staging area (e.g. a data lake). From there the Analytics Engineers take over, cleansing and perhaps restructuring the data into a form and schema that is accessible and performant for the required use.

Where to Start?

So there is a lot to cover, even if I were just to summarise across all of it. There are seventy-seven sub-categories alone in Matt Turck’s useful survey of the landscape (pdf / source data / article), and that’s from 2 years ago 😅 (lakeFS published something similar for 2022).

What I’m going to do is dig into some of the particular areas that have caught my eye. These are generally the ones most closely related to my specific area of interest: data engineering for analytics purposes. Even then I’m sure I’ll miss a huge swathe of relevant content.

Footnote: So What?

You may be wondering what the purpose of this blog even is. There’s no "call to action", no great insight. And that’s fine - these are just my notes for myself, and if they’re of interest to anyone else then they are welcome to peruse them :)